11.4 Tool: LIME

Local Interpretable Model-agnostic Explanations (LIME) is a well-known tool that explains a model’s prediction for a specific input. Details are given in the original paper “Why Should I Trust You?”: Explaining the Predictions of Any Classifier (Tulio Ribeiro, Singh, and Guestrin 2016). The code is available on GitHub, along with an installation guide and links to tutorials.

Local Interpretable Model-agnostic Explanations (LIME):

- Works for
  - images
  - text
  - tabular data
- Model agnostic, i.e. works for any model
  - Neural networks
  - Support Vector Machines (SVM)
  - Tree based algorithms
  - …

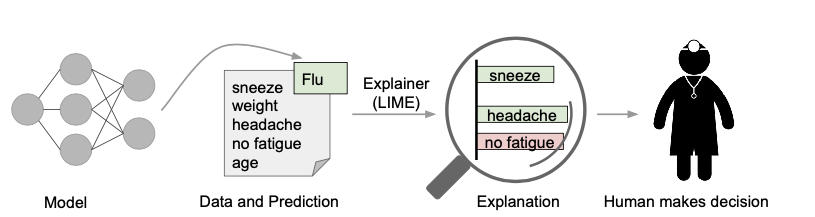

Explaining individual predictions. A model predicts that a patient has the flu, and LIME highlights the symptoms in the patient’s history that led to the prediction. Sneeze and headache are portrayed as contributing to the “flu” prediction, while “no fatigue” is evidence against it. With these, a doctor can make an informed decision about whether to trust the model’s prediction. Figure from (Tulio Ribeiro, Singh, and Guestrin 2016)

Kevin Lemagnen gave an excellent presentation at PyData New York 2019, Open the Black Box: an Introduction to Model Interpretability with LIME and SHAP, where he lists the main points of how LIME works as follows:

LIME - How does it work?

- Choose an observation to explain

- Create a new dataset around the observation by sampling from a distribution learnt on the training data

- Calculate the distances between the new points and the observation; that’s our measure of similarity

- Use the model to predict the class of the new points

- Find the subset of m features that has the strongest relationship with our target class

- Fit a linear model on the fake data in m dimensions, weighted by similarity

- The weights of the linear model are used as the explanation of the decision
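The steps above can be sketched in a few lines of NumPy. This is a simplified illustration for tabular data, not the implementation used by the `lime` package: the function name `lime_sketch`, the toy `black_box` model, the normal sampling scheme, the exponential kernel with its width, and the "keep the m largest coefficients" feature selection are all assumptions chosen to keep the example short.

```python
import numpy as np

def black_box(X):
    """Hypothetical model to explain: non-linear in features 0 and 1, ignores feature 2."""
    return 1.0 / (1.0 + np.exp(-(3.0 * X[:, 0] - 2.0 * X[:, 1] ** 2)))

def lime_sketch(predict_fn, X_train, x, m=2, n_samples=2000, seed=0):
    """Return ({feature index: weight}, intercept) of a local linear surrogate around x."""
    rng = np.random.default_rng(seed)
    std = X_train.std(axis=0)
    std = np.where(std == 0, 1.0, std)  # guard against constant features

    # Create a new dataset around x; the sampling scale is learnt from the training data.
    Z = x + rng.normal(size=(n_samples, x.size)) * std
    Zs = (Z - x) / std  # offsets from x in standardized units

    # Distances to x, turned into similarity weights by an exponential kernel
    # (the kernel width is an assumed heuristic).
    dist = np.linalg.norm(Zs, axis=1)
    kernel_width = 0.75 * np.sqrt(x.size)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)
    sw = np.sqrt(w)

    # Use the black-box model to predict the new points.
    y = predict_fn(Z)

    def fit(cols):
        # Weighted least squares with an intercept on the selected columns.
        A = np.column_stack([np.ones(n_samples), Zs[:, cols]])
        beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
        return beta

    # Keep the m features with the largest weights in a full fit, then refit on them.
    all_cols = np.arange(x.size)
    top = all_cols[np.argsort(-np.abs(fit(all_cols)[1:]))[:m]]
    beta = fit(top)
    return dict(zip(top.tolist(), beta[1:].tolist())), float(beta[0])

X_train = np.random.default_rng(1).normal(size=(500, 3))
x = np.array([0.0, 1.0, 0.0])
weights, intercept = lime_sketch(black_box, X_train, x, m=2)
print(weights)
```

For this toy model the sign of each weight is the local explanation: around `x`, increasing feature 0 pushes the prediction up, increasing feature 1 pushes it down, and the irrelevant feature 2 is dropped by the selection step.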

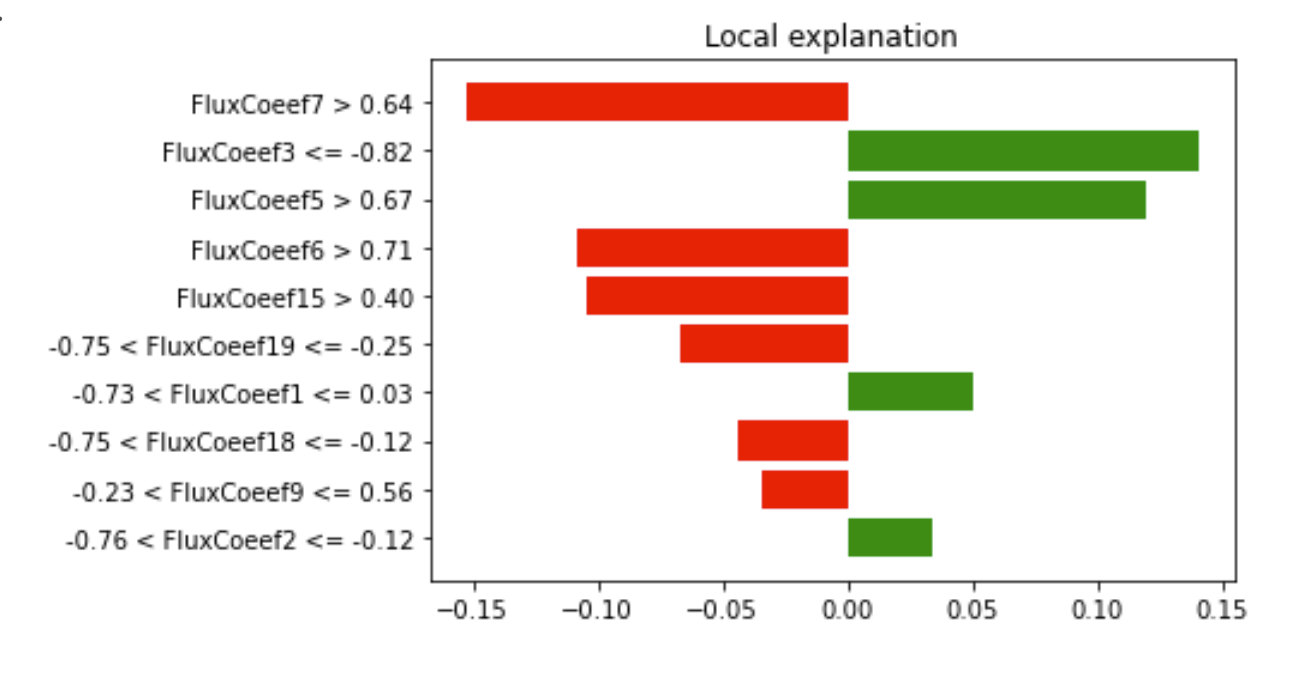

As a result, LIME gives a local explanation of the model, indicating the effect that the values of features have on the target prediction. These explanations can be used to discuss the model with domain experts and to find issues with the model, the data, or other more fundamental issues.

Since the surrogate model is linear, it might not be very precise for highly non-linear models. An indication of the surrogate model’s accuracy is the \(R^2\) value of the linear regression.
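As a rough illustration of what this \(R^2\) measures, the sketch below computes a similarity-weighted \(R^2 = 1 - \sum_i w_i (y_i - \hat{y}_i)^2 / \sum_i w_i (y_i - \bar{y}_w)^2\) for a linear surrogate fitted to two toy targets. The helper names `weighted_r2` and `surrogate_r2`, the kernel, and the toy data are assumptions made for this example. In the `lime` package the corresponding value is, as far as I know, available as the `score` attribute of the explanation object; check this against the installed version.

```python
import numpy as np

def weighted_r2(y, y_hat, w):
    """Weighted coefficient of determination of the surrogate predictions y_hat."""
    y, y_hat, w = (np.asarray(a, dtype=float) for a in (y, y_hat, w))
    y_bar = np.average(y, weights=w)
    ss_res = np.sum(w * (y - y_hat) ** 2)
    ss_tot = np.sum(w * (y - y_bar) ** 2)
    return 1.0 - ss_res / ss_tot

# Toy neighbourhood: one feature z, with similarity weights that decay with distance from 0.
rng = np.random.default_rng(0)
z = rng.normal(size=500)
w = np.exp(-z ** 2)
A = np.column_stack([np.ones_like(z), z])  # intercept + slope
sw = np.sqrt(w)

def surrogate_r2(y):
    """Fit a weighted linear surrogate to y and report its weighted R^2."""
    beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return weighted_r2(y, A @ beta, w)

r2_linear = surrogate_r2(2.0 * z + 1.0)       # target is linear: surrogate fits it exactly
r2_nonlinear = surrogate_r2(np.sin(5.0 * z))  # target oscillates: surrogate fits poorly
print(r2_linear, r2_nonlinear)
```

A value close to 1 suggests the linear explanation is faithful in the neighbourhood of the observation; a low value is a warning that the weights should not be over-interpreted.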

Example of LIME’s local explanation