11.1 Method: Layer-Wise Relevance Propagation

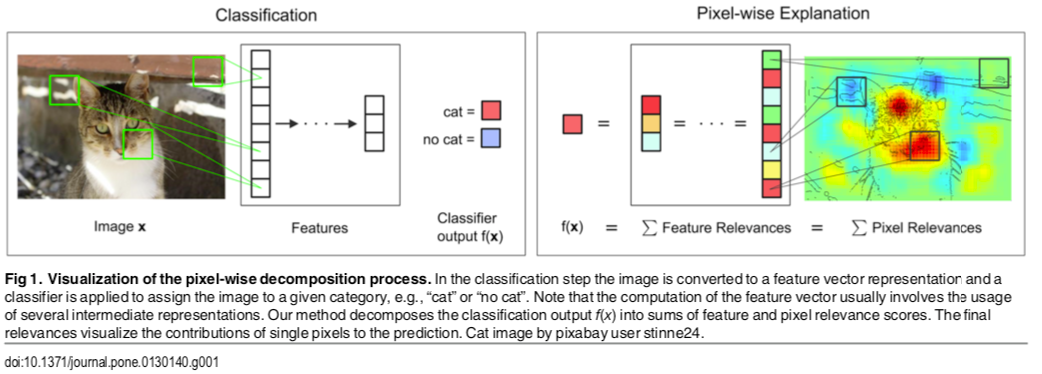

Developed by the Fraunhofer Heinrich-Hertz-Institute and TU Berlin, this method is well known in explainable ML. A detailed description is given in the paper “On Pixel-wise Explanations for Non-Linear Classifier Decisions by Layer-wise Relevance Propagation” (Bach et al. 2015).

First, watch Layer-Wise Relevance Propagation (LRP) at work in the interactive demo "Explaining Artificial Intelligence" of the Fraunhofer Heinrich-Hertz-Institute (best viewed in the Chrome browser).

Purpose of LRP:

- Explain the prediction of a neural network in the domain of its input

- Example

  - Cancer prediction explained by LRP

  - which pixel contributes to what extent

  - Demo Explaining Artificial Intelligence (best viewed in Chrome browser)

- Method can be applied to already trained classifiers

  - for text and images

The method can be used on images as well as on text or any other data fed to a neural network. The concept is best illustrated with an image example:

Figure from (Bach et al. 2015)

Basics of LRP:

- Uses weights and neural activations

  - activations are created by a forward pass (i.e. prediction, not training)

- Propagates relevance back from the prediction to the input, layer by layer

- Visualizes the image pixels that contributed most to the prediction

- Drawback

  - only one sample is explained at a time

  - for explanations over several samples \(\implies\) see chapter 11.2
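The backward pass from the prediction to the input can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the reference implementation of Bach et al. (2015): it assumes a small fully connected ReLU network with random, hypothetical weights, and it uses only the ε-rule, one of several propagation rules in the LRP literature. The helper names `forward` and `lrp_epsilon` are made up for illustration. With \(z_k = \sum_j a_j w_{jk} + b_k\) the pre-activation of an upper-layer neuron \(k\), the ε-rule redistributes its relevance \(R_k\) onto the neurons \(j\) of the layer below as

\[ R_j = \sum_k \frac{a_j w_{jk}}{z_k + \epsilon\,\mathrm{sign}(z_k)}\, R_k \]

```python
import numpy as np


def forward(x, weights, biases):
    """Forward pass of a dense network; returns the activations of every layer.

    Hidden layers use ReLU; the last layer stays linear so that its outputs are
    the raw class scores that LRP explains.
    """
    activations = [x]
    a = x
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = a @ W + b
        a = z if i == len(weights) - 1 else np.maximum(0.0, z)
        activations.append(a)
    return activations


def lrp_epsilon(weights, biases, activations, target, eps=1e-6):
    """Propagate the score of output neuron `target` back to the input (epsilon rule)."""
    # Initial relevance: the explained class score, all other outputs set to zero.
    relevance = np.zeros_like(activations[-1])
    relevance[target] = activations[-1][target]
    # Walk backwards: each step redistributes the relevance of layer l+1 onto layer l.
    for W, b, a in zip(reversed(weights), reversed(biases), reversed(activations[:-1])):
        z = a @ W + b                                # pre-activations z_k of the upper layer
        z = z + eps * np.where(z >= 0, 1.0, -1.0)    # z_k + eps * sign(z_k), sign(0) taken as +1
        s = relevance / z                            # R_k / (z_k + eps * sign(z_k))
        relevance = a * (W @ s)                      # R_j = a_j * sum_k w_jk * s_k
    return relevance


# Hypothetical toy network: 4 inputs -> 3 hidden ReLU units -> 2 class scores.
rng = np.random.default_rng(0)
weights = [rng.normal(size=(4, 3)), rng.normal(size=(3, 2))]
biases = [np.zeros(3), np.zeros(2)]  # zero biases so relevance is (almost) conserved
x = rng.normal(size=4)

acts = forward(x, weights, biases)
target = int(np.argmax(acts[-1]))    # explain the predicted class
R = lrp_epsilon(weights, biases, acts, target)
print("input relevances:", R)
print("sum of relevances:", R.sum(), "class score:", acts[-1][target])
```

With zero biases, the input relevances sum (up to the small loss caused by ε) to the explained class score. This conservation property is what allows the relevance map to be read as "which input contributes to what extent". Nonzero biases absorb part of the relevance, so the sum is then only approximately equal to the score.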

References

Bach, Sebastian, Alexander Binder, Grégoire Montavon, Frederick Klauschen, Klaus-Robert Müller, and Wojciech Samek. 2015. “On Pixel-Wise Explanations for Non-Linear Classifier Decisions by Layer-Wise Relevance Propagation.” PLoS ONE 10 (7): e0130140.