7.2 Gather data

Gathering data is one of the key aspects of an ML project with three main questions:

Two fundamental questions:

How much data is necessary?

Which data is useful?

What kind of data can be used?

For the first question there are no clear answers, for the second the are plenty of methods to decide whether data is useful or not.

As for the question about the kind of data which can be used, lets get some inspiration in the next section

7.2.2 How much data is necessary?

There are a number of rules of thumb out there like

Rules of thumb:

- For regression analysis

- 10 times as many samples than parameters

- For image recognition

- 1000 samples per category

- can go down significantly using pre-trained models

but those rules a just a rough guidance since there are plenty of factors influencing the data needed.

Basically, it comes down to try and error, i.e. run a model with a given training data set and determine whether or not the performance is satisfying, if not, available options are:

- Gather more training data

- Increase quality of data

- Replacing missing values

- Screen data for wrong labelling

- Add more feature engineering

- Refine pre-processing

- Refine model

For the case at hand an increase of training data was not possible the only option available was model refinement by hyperparameter search for XGBoost and NN.

7.2.2.1 Google AutoML guidelines

For general case, Google provides guidelines to estimate the required amount of training data for its AutoML service.

7.2.2.1.1 Tabular data

The guideline for tabular data can be found at https://cloud.google.com/automl-tables/docs/beginners-guide and is:

- Classification problem: 50 x the number features

- Regression problem: 200 x the number of features

7.2.2.1.2 Vision data

The guideline for vision classification can be found at https://cloud.google.com/vision/automl/docs/beginners-guide and is: Target at least 1000 examples per label.

7.2.2.1.3 Natural language data (NLP)

The guideline for NLP classification can be found at https://cloud.google.com/natural-language/automl/docs/beginners-guide

Train a model using 50 examples per label and then evaluate the results. Add more examples and retrain until you meet your accuracy targets, which could require hundreds or even thousands of examples per label

Factors influencing data requirement:

Factors influencing data requirement:

- model complexity

- similarity of data

- the higher the similarity the less new samples help

- noise on data

- more samples

- more computational effort

- for trees might be counterproductive

Sometimes it is easy to create data. When Ayers was thinking about the title of his new book he targeted Google Ads, each with a different title. He got 250,000 samples related to which ad was clicked on most (Ayres 2007).

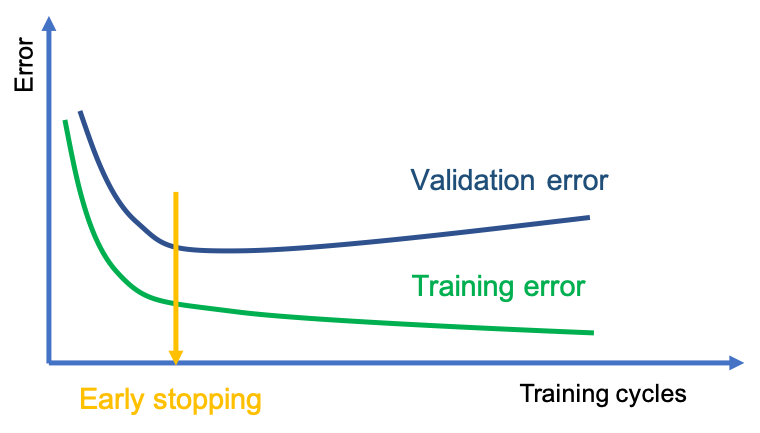

During model training it might become obvious that we run into overfitting, that is the case when training error gets smaller and at the same time the validation error goes up or when the validation error is much higher than the training error.

Overfitting as indicator for not enough data:

- Validation error is much higher than training error

- Validation error increase with training cycles

- Model memorizes dat but doesn’t generalise

7.2.2.2 Dealing with small data TBC

Recent advances in ML reduce the amount of data needed to build meaningful model. Promising concepts presented at https://www.industryweek.com/technology-and-iiot/digital-tools/article/21122846/making-ai-work-with-small-data are listed below.

Concepts to deal with small data:

- Synthetic data generation

- synthesize novel images that are difficult to collect in real life.

- using GANs, variational autoencoders, and data augmentation

- Transfer learning

- using pre-trained model

- add reduced training to specific task

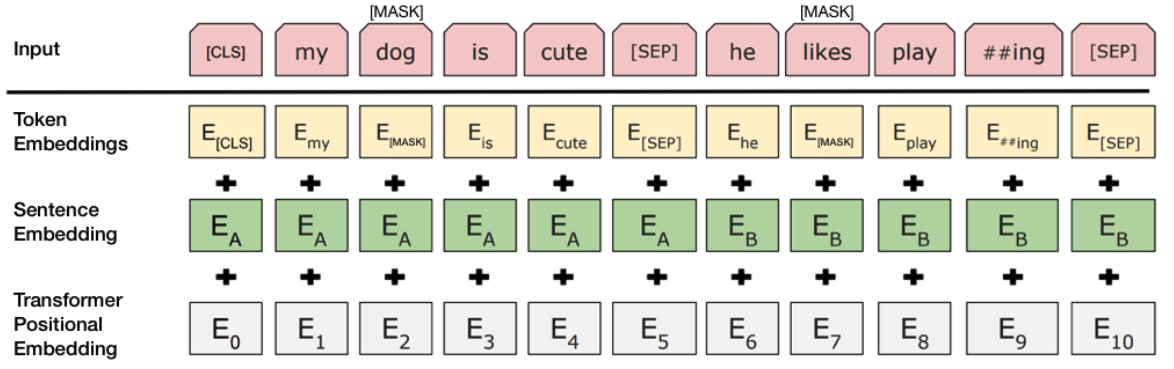

- Self-supervised learning see chapter 8.1.1

- creating labeled data automatically,e.g. masks words in sentence

- Anomaly detection

- model sees zero examples of defect and only examples of OK samples

- algorithm flags anything that deviates significantly from the OK as a potential problem.

- Human-in-the-loop

- start with higher error system

- if confidence is low \(\implies\) show to human expert

- over time model will become better

7.2.3 Which data is useful?

Ideally only data which explain the output are fed into a model. But there might be features which are not known to be of importance. On the other hand there might be features which are overrated as to the importance they have for the output. Anyhow, both can only be known after a model is build. Also, it might be that a feature is valuable for one model but not so much for another model.

Data useful?:

- Could be detected during exploratory data analysis see chapter 7.3

- Has to be tested with model

- Importance can be model dependent

- Not helpful features cause

- performance drop

- more complex models

Finding the importance of a feature falls into the scope of feature engineering as described in chapter 7.4.3