11.5 Tool: tf-explain tbd

tf-explain implements interpretability methods as TensorFlow 2.x callbacks to make neural networks easier to understand. See "Introducing tf-explain, Interpretability for TensorFlow 2.0".

Documentation: https://tf-explain.readthedocs.io

The code can be found on GitHub.

tf-explain:

- Implements interpretability methods as TensorFlow 2.x callbacks

- Provides several methods that examine different aspects of a model:

    - Activations Visualization

    - Vanilla Gradients

    - Gradients*Inputs

    - Occlusion Sensitivity

    - Grad-CAM (Gradient-weighted Class Activation Mapping)

    - SmoothGrad

    - Integrated Gradients

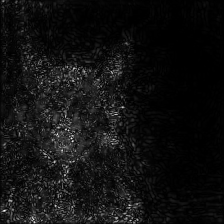

Vanilla Gradients

Visualize the gradients of the model's decision (the predicted class score) with respect to the input pixels.

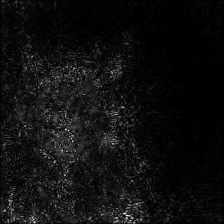
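The idea can be sketched without any framework. The snippet below is a minimal illustration, not tf-explain's implementation: the four-pixel "image", the `WEIGHTS` values, and the `score` function are a hypothetical toy model, and the gradient is approximated with finite differences, whereas a real model would typically use automatic differentiation.

```python
import math

# Hypothetical toy "model": a logistic score over a flattened 4-pixel image.
WEIGHTS = [2.0, -1.0, 0.0, 0.5]

def score(pixels):
    z = sum(w * p for w, p in zip(WEIGHTS, pixels))
    return 1.0 / (1.0 + math.exp(-z))

def vanilla_gradients(f, pixels, eps=1e-5):
    """Approximate d f / d pixel_i with central finite differences."""
    grads = []
    for i in range(len(pixels)):
        up = list(pixels)
        up[i] += eps
        down = list(pixels)
        down[i] -= eps
        grads.append((f(up) - f(down)) / (2 * eps))
    return grads

image = [0.2, 0.8, 0.5, 0.1]
# The saliency map is the gradient magnitude per pixel.
saliency = [abs(g) for g in vanilla_gradients(score, image)]
```

In this toy model the first pixel gets the largest saliency because it has the largest weight, and the third pixel gets none because the score does not depend on it.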

Gradients*Inputs

A variant of Vanilla Gradients that weights the gradients by the input values.

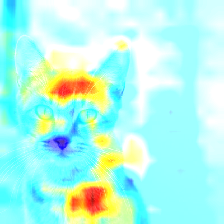
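A minimal sketch of the weighting step, assuming a hypothetical logistic toy model (`WEIGHTS`, `score`) whose gradient is known in closed form; it is meant to show the element-wise product only, not how tf-explain computes it.

```python
import math

WEIGHTS = [2.0, -1.0, 0.0, 0.5]  # hypothetical toy model parameters

def score(pixels):
    z = sum(w * p for w, p in zip(WEIGHTS, pixels))
    return 1.0 / (1.0 + math.exp(-z))

def gradients(pixels):
    """Analytic gradient of the logistic score with respect to each pixel."""
    s = score(pixels)
    return [s * (1.0 - s) * w for w in WEIGHTS]

def gradients_times_inputs(pixels):
    """Weight each gradient by the value of the pixel it belongs to."""
    return [g * p for g, p in zip(gradients(pixels), pixels)]

image = [0.0, 0.8, 0.5, 0.1]
attributions = gradients_times_inputs(image)
```

The first pixel has the largest gradient, but because its value is zero its attribution is zero as well. This is the main difference from plain gradients: a pixel only receives attribution if it is both influential and actually present in the input.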

Occlusion Sensitivity

Visualize how parts of the image affect the neural network’s confidence by occluding them one at a time.

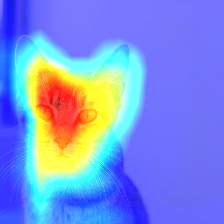
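The occlusion loop can be sketched as follows. This is an illustrative version under stated assumptions: the `score` function is a hypothetical toy classifier, the patch is filled with zeros, patches do not overlap, and sensitivity is reported as the drop in confidence. A library implementation may choose a different fill value or scoring convention.

```python
import math

def score(image):
    """Hypothetical toy classifier: confidence depends only on the top-left 2x2 block."""
    evidence = sum(image[r][c] for r in range(2) for c in range(2))
    return 1.0 / (1.0 + math.exp(-(evidence - 2.0)))

def occlusion_sensitivity(f, image, patch_size, fill=0.0):
    """Return a grid of confidence drops, one entry per occluded patch."""
    height, width = len(image), len(image[0])
    base = f(image)
    heatmap = []
    for top in range(0, height, patch_size):
        row = []
        for left in range(0, width, patch_size):
            occluded = [list(r) for r in image]  # copy so the input stays untouched
            for r in range(top, min(top + patch_size, height)):
                for c in range(left, min(left + patch_size, width)):
                    occluded[r][c] = fill
            row.append(base - f(occluded))
        heatmap.append(row)
    return heatmap

image = [[1.0] * 4 for _ in range(4)]
heatmap = occlusion_sensitivity(score, image, patch_size=2)
```

Only the top-left entry of `heatmap` is non-zero here, which matches the toy model: hiding any other patch leaves the confidence unchanged.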

Grad-CAM

Visualize how parts of the image affect the neural network’s output by looking into the activation maps of a convolutional layer, weighted by the gradients of the output with respect to those maps.
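The combination step of Grad-CAM can be sketched in a few lines. In a real network the activation maps come from a convolutional layer and the gradients are those of the class score with respect to these maps; here both are small hand-written values, and the function name `grad_cam` is only illustrative.

```python
def grad_cam(activations, gradients):
    """Combine K feature maps (each H rows of W floats) into one heatmap."""
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight = spatial mean of that channel's gradient.
    weights = [sum(map(sum, g)) / (height * width) for g in gradients]
    heatmap = [[0.0] * width for _ in range(height)]
    for w, fmap in zip(weights, activations):
        for r in range(height):
            for c in range(width):
                heatmap[r][c] += w * fmap[r][c]
    # ReLU keeps only the regions that push the class score up.
    return [[max(0.0, v) for v in row] for row in heatmap]

# Two hypothetical 2x2 feature maps and their gradients.
activations = [
    [[1.0, 0.0], [0.0, 0.0]],  # fires in the top-left corner
    [[0.0, 0.0], [0.0, 1.0]],  # fires in the bottom-right corner
]
gradients = [
    [[1.0, 1.0], [1.0, 1.0]],      # this channel supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # this channel speaks against it
]
cam = grad_cam(activations, gradients)
```

The resulting map highlights only the top-left corner: the second channel has a negative weight, so its contribution is removed by the ReLU. In practice the map is then upsampled to the input size and overlaid on the image.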

SmoothGrad

Visualize stabilized gradients on the inputs towards the decision, obtained by averaging the gradients over several noisy copies of the input.
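A minimal sketch of the averaging, assuming a hypothetical logistic toy model and Gaussian noise; the parameter names `num_samples` and `noise` and their default values are choices made for this example.

```python
import math
import random

WEIGHTS = [2.0, -1.0, 0.0, 0.5]  # hypothetical toy model parameters

def gradients(pixels):
    """Analytic gradient of a logistic score with respect to each pixel."""
    z = sum(w * p for w, p in zip(WEIGHTS, pixels))
    s = 1.0 / (1.0 + math.exp(-z))
    return [s * (1.0 - s) * w for w in WEIGHTS]

def smoothgrad(pixels, num_samples=50, noise=0.1, seed=0):
    """Average the gradients over several noisy copies of the input."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    total = [0.0] * len(pixels)
    for _ in range(num_samples):
        noisy = [p + rng.gauss(0.0, noise) for p in pixels]
        for i, g in enumerate(gradients(noisy)):
            total[i] += g
    return [t / num_samples for t in total]

image = [0.2, 0.8, 0.5, 0.1]
smoothed = smoothgrad(image)
```

Averaging over perturbed inputs reduces the influence of sharp local fluctuations in the gradient, which is why the resulting maps tend to look less noisy than plain gradient maps.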

Integrated Gradients

Visualize an average of the gradients computed along a path from a baseline (for example, an all-black image) to the input.
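The path average can be sketched as a simple numerical integration. The snippet assumes a hypothetical logistic toy model, an all-zero baseline, a straight-line path, and a midpoint rule with `n_steps` points; these are illustration choices and not necessarily what a library implementation uses.

```python
import math

WEIGHTS = [2.0, -1.0, 0.0, 0.5]  # hypothetical toy model parameters

def score(pixels):
    z = sum(w * p for w, p in zip(WEIGHTS, pixels))
    return 1.0 / (1.0 + math.exp(-z))

def gradients(pixels):
    """Analytic gradient of the logistic score with respect to each pixel."""
    s = score(pixels)
    return [s * (1.0 - s) * w for w in WEIGHTS]

def integrated_gradients(pixels, baseline, n_steps=50):
    """Average gradients along the straight path baseline -> input, then scale."""
    avg = [0.0] * len(pixels)
    for k in range(n_steps):
        alpha = (k + 0.5) / n_steps  # midpoint of the k-th path segment
        point = [b + alpha * (p - b) for p, b in zip(pixels, baseline)]
        for i, g in enumerate(gradients(point)):
            avg[i] += g / n_steps
    return [(p - b) * a for p, b, a in zip(pixels, baseline, avg)]

image = [0.2, 0.8, 0.5, 0.1]
baseline = [0.0] * len(image)
attributions = integrated_gradients(image, baseline)
```

A useful sanity check is the completeness property: the attributions sum (up to integration error) to `score(image) - score(baseline)`, so the difference in the model's output is fully distributed over the input pixels.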