4.4 Method: LIME

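Local Interpretable Model-agnostic Explanations (LIME) is a well-known technique for explaining the prediction a model makes for a specific input. It is model-agnostic because it only needs to query the model's predictions. It fits a simple, interpretable model, such as a sparse linear model, that approximates the original model's behavior in the neighborhood of that input. Details are given in the original paper, “‘Why Should I Trust You?’: Explaining the Predictions of Any Classifier” (Tulio Ribeiro, Singh, and Guestrin 2016).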

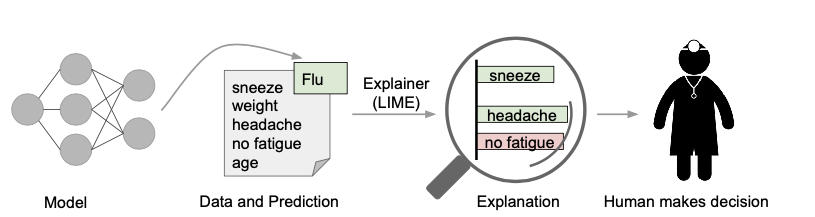

Explaining individual predictions. A model predicts that a patient has the flu, and LIME highlights the symptoms in the patient’s history that led to the prediction. Sneeze and headache are shown as contributing to the “flu” prediction, while “no fatigue” is evidence against it. With this information, a doctor can make an informed decision about whether to trust the model’s prediction. Figure from (Tulio Ribeiro, Singh, and Guestrin 2016).
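To make the idea concrete, here is a minimal sketch of a LIME-style explanation in plain Python (standard library only). It is not the authors' implementation and not the API of any LIME package. It rests on several assumptions. Each interpretable feature is binary (1 = present in the instance being explained, 0 = switched off). Neighbors are sampled uniformly at random. Proximity uses the exponential kernel exp(−D²/σ²) on Euclidean distance, with an arbitrary default width of 1.0. Finally, the surrogate is a weighted ridge regression, which stands in for the K-Lasso feature-selection step described in the paper. The names `explain_instance`, `solve_linear` and `toy_model` are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def explain_instance(
    predict: Callable[[Sequence[int]], float],
    num_features: int,
    num_samples: int = 500,
    kernel_width: float = 1.0,  # assumed sigma for the proximity kernel
    ridge: float = 1e-3,  # assumed regularization strength
    seed: int = 0,
) -> List[float]:
    """Return one local weight per interpretable feature (intercept dropped)."""
    rng = random.Random(seed)
    x_prime = [1] * num_features  # instance being explained: every feature present
    rows: List[List[float]] = []
    targets: List[float] = []
    weights: List[float] = []
    for k in range(num_samples):
        # First sample is the instance itself; the others keep each feature
        # with probability 0.5 and switch it off otherwise.
        z = x_prime[:] if k == 0 else [rng.randint(0, 1) for _ in range(num_features)]
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(z, x_prime)))
        rows.append([1.0] + [float(v) for v in z])  # leading 1.0 = intercept column
        targets.append(predict(z))  # query the black-box model
        weights.append(math.exp(-dist ** 2 / kernel_width ** 2))  # proximity kernel
    # Weighted ridge normal equations: (Z^T W Z + ridge * R) w = Z^T W y,
    # where R penalizes every coefficient except the intercept.
    p = num_features + 1
    ata = [
        [sum(wt * r[i] * r[j] for r, wt in zip(rows, weights)) for j in range(p)]
        for i in range(p)
    ]
    aty = [sum(wt * r[i] * y for r, y, wt in zip(rows, targets, weights)) for i in range(p)]
    for i in range(1, p):
        ata[i][i] += ridge
    coef = solve_linear(ata, aty)
    return coef[1:]


# Hypothetical black-box model over three binary features (1 = present).
def toy_model(z: Sequence[int]) -> float:
    return 0.1 + 0.5 * z[0] + 0.3 * z[1] - 0.2 * z[2]


weights = explain_instance(toy_model, num_features=3)
print([round(w, 3) for w in weights])
```

Because `toy_model` is itself linear, the recovered weights should land very close to its coefficients (0.5, 0.3 and −0.2). A positive weight marks a feature that pushes the prediction up and a negative weight marks evidence against it, mirroring the supporting and opposing symptoms in the figure. For a nonlinear model the weights only describe its behavior near the explained instance, which is the "local" in LIME.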

References

Tulio Ribeiro, Marco, Sameer Singh, and Carlos Guestrin. 2016. “‘Why Should I Trust You?’: Explaining the Predictions of Any Classifier.” arXiv Preprint arXiv:1602.04938.